Ironman Software Forums

Continue the conversation on the Ironman Software forums. Chat with over 1000 users about PowerShell, PowerShell Universal, and PowerShell Pro Tools.

We hope to start providing some insight into how Ironman Software engineers our products. We’ll be posting about our processes, tools and techniques, and we hope you find them useful.

As PowerShell Universal has evolved, it’s greatly increased in complexity. From the start it was built on PowerShell 7, ASP.NET and React. With each release we’ve added more and more features. Even when features are isolated to a single component, they still have to be tested across the entire platform. Additionally, PSU has always been cross-platform. This makes it difficult to test in all environments.

Until recently, we maintained several CI/CD pipelines to test PowerShell Universal on pre-built GitHub agents. We had a Windows pipeline that ran on Windows Server 2019 and a Linux pipeline that ran on Ubuntu 20.04. And they were flaky.

Each build required us to tear down the existing environment and build a new one. This was a slow process that would often fail or leave behind relics of the previous test run. We’d have to restart the build and hope it worked. The result was either unwarranted confidence in our builds or no confidence at all in the test process. This led to bugs, regressions and a lot of wasted time.

We’ve been using Docker for a while to build our images. Recently, we started publishing preview Docker images for our nightly releases. Since these are built nightly, we can leverage them to run our integration tests. Each evening, our GitHub nightly builds will run and build all the PSU flavors and publish several of them to Docker Hub.

Once complete, we pull the images on our test servers to run integration tests against each platform. This post will outline how we accomplish this and will give you some ideas on how to validate PSU in your environments.

When the nightly build runs, it produces all the binaries for platforms we support. It then ZIPs the resulting artifacts up and stores them in an Azure Blob. This is where you download nightly builds from when visiting IronmanSoftware.com.

From there, we run two GitHub actions to build and publish the Docker images to Docker Hub; one for Linux and one for Windows. The action to build the images is pretty simple. It checks for the latest nightly build and runs a Docker build command. Here’s an example of our GitHub action YAML. It’s mostly calling our PS scripts.

    name: Master - Preview - Windows - Docker

    on:
      workflow_run:
        workflows: ["Master - Nightly"]
        types:
          - completed
      workflow_dispatch:

    jobs:
      build:
        if: ${{ github.event.workflow_run.conclusion == 'success' }}
        name: Build
        runs-on: windows-latest
        steps:
          - uses: actions/checkout@v1
            with:
              ref: "master"
          - name: Build Docker Image
            run: .\src\docker\windows-preview\build.ps1
            env:
              RUNID: ${{ github.event.workflow_run.id }}
            shell: pwsh
          - name: Push Docker Image
            run: .\src\docker\windows-preview\push.ps1
            env:
              dockerpassword: ${{ secrets.DOCKER_PASSWORD }}

If the build and unit tests were successful, this action will run. The build.ps1 file in windows-preview will build the image. As you can see, it saves some modules, finds the latest nightly build, and then runs docker build with the URL parameter.

    Set-Location $PSScriptRoot

    $Version = (Get-Content "$PSScriptRoot/../../../version.txt" -Raw).Trim()

    Write-Host "Saving modules..."
    Save-Module -Name 'Az.KeyVault' -RequiredVersion '4.10.2' -Path $PSScriptRoot\Modules -Force -Verbose
    Save-Module -Name 'Az.Compute' -RequiredVersion '6.2.0' -Path $PSScriptRoot\Modules -Force -Verbose
    Save-Module -Name 'Az.Resources' -RequiredVersion '6.9.1' -Path $PSScriptRoot\Modules -Force -Verbose
    Save-Module -Name 'Invoke-SqlCmd2' -Path $PSScriptRoot\Modules -Force -Verbose
    Save-Module -Name 'dbatools' -Path $PSScriptRoot\Modules -Force -Verbose
    Save-Module -Name 'PSScriptAnalyzer' -Path $PSScriptRoot\Modules -Force -Verbose

    [xml]$Builds = (Invoke-WebRequest "https://imsreleases.blob.core.windows.net/universal-nightly?restype=container&comp=list").Content.Substring(3)

    $Latest = $Builds.EnumerationResults.Blobs.Blob | Where-Object { $_.Name -like "*.win7-x64.$Version*" } | ForEach-Object {
        [PSCustomObject]@{
            Url          = $_.Url
            LastModified = [DateTime]$_.Properties['Last-Modified'].'#text'
        }
    } | Sort-Object LastModified -Descending | Select-Object -First 1

    Write-Host "Downloading $($Latest.Url)"

    docker build . --tag=universal --build-arg="URL=$($Latest.Url)"
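
The selection step in the script above can be tried offline. The following is a minimal sketch, assuming a listing shaped like the one the script reads (`EnumerationResults/Blobs/Blob` with `Name`, `Url` and `Properties/Last-Modified`). The function name `Select-LatestBuild`, the sample XML and the `example.invalid` URLs are illustrative and are not part of our build scripts.

```powershell
# Hypothetical sample listing; the real one is downloaded from blob storage.
$SampleListing = @'
<EnumerationResults>
  <Blobs>
    <Blob>
      <Name>Universal.win7-x64.4.1.0.zip</Name>
      <Url>https://example.invalid/older.zip</Url>
      <Properties><Last-Modified>Mon, 02 Oct 2023 03:00:00 GMT</Last-Modified></Properties>
    </Blob>
    <Blob>
      <Name>Universal.win7-x64.4.1.0.zip</Name>
      <Url>https://example.invalid/newer.zip</Url>
      <Properties><Last-Modified>Tue, 03 Oct 2023 03:00:00 GMT</Last-Modified></Properties>
    </Blob>
    <Blob>
      <Name>Universal.linux-x64.4.1.0.zip</Name>
      <Url>https://example.invalid/linux.zip</Url>
      <Properties><Last-Modified>Wed, 04 Oct 2023 03:00:00 GMT</Last-Modified></Properties>
    </Blob>
  </Blobs>
</EnumerationResults>
'@

function Select-LatestBuild {
    param(
        [Parameter(Mandatory)][xml]$Listing,
        [Parameter(Mandatory)][string]$Pattern
    )

    # Keep only blobs whose name matches the wildcard pattern, then take the newest one.
    $Listing.EnumerationResults.Blobs.Blob |
        Where-Object { $_.Name -like $Pattern } |
        ForEach-Object {
            [PSCustomObject]@{
                Url          = $_.Url
                LastModified = [DateTime]$_.Properties.'Last-Modified'
            }
        } |
        Sort-Object LastModified -Descending |
        Select-Object -First 1
}

$Latest = Select-LatestBuild -Listing $SampleListing -Pattern '*.win7-x64.4.1.0*'
Write-Host "Latest build: $($Latest.Url)"
```

Sorting on a parsed `DateTime` rather than on the blob name means the result does not depend on how build numbers are formatted in the file name.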

From there, Docker builds the image from our Dockerfile. The Dockerfile isn’t too complicated. It downloads and unzips the latest build and sets the entry point to the Universal.Server.exe executable. Our images are based on the Microsoft PowerShell images.

    # escape=`
    ARG fromTag=lts-windowsservercore-ltsc2022
    ARG WindowsServerRepo=mcr.microsoft.com/powershell

    # As this is a multi-stage build, this stage will eventually be thrown away
    FROM ${WindowsServerRepo}:${fromTag} AS installer-env

    ARG URL=nothing

    SHELL ["C:\\Windows\\System32\\WindowsPowerShell\\v1.0\\powershell.exe", "-command"]

    RUN $url = $env:URL; `
        Write-host "downloading: $url"; `
        [Net.ServicePointManager]::SecurityProtocol = [Net.ServicePointManager]::SecurityProtocol -bor [Net.SecurityProtocolType]::Tls12; `
        Invoke-WebRequest -Uri $url -outfile /universal.zip -verbose ; `
        Expand-Archive universal.zip -DestinationPath .\Universal; `
        New-Item -Path C:\ProgramData\UniversalAutomation -ItemType Directory -Force;

    FROM ${WindowsServerRepo}:${fromTag}

    ENV ProgramData="C:\ProgramData"

    COPY --from=installer-env ["\\Universal\\", "$ProgramData\\Universal"]
    COPY ["/Modules", "$ProgramData\\Universal\\Modules"]

    # Set the path
    RUN setx /M PATH "%ProgramData%\Universal;%PATH%;"

    EXPOSE 5000

    ENTRYPOINT ["C:/ProgramData/Universal/Universal.Server.exe"]

Once this is complete, we publish the build to Docker Hub.

Once the images have been published, we invoke some actions to test them. We have two Docker hosts, one for Windows and one for Linux, and a GitHub action for each. Here is the YAML file for our Windows tests. As you can see, we install some modules and then invoke our tests.

    name: Master - Windows Integration Tests

    env:
      ACTIONS_ALLOW_UNSECURE_COMMANDS: true

    on:
      workflow_dispatch:
      workflow_run:
        workflows: ["Master - Preview - Windows - Docker"]
        types:
          - completed

    jobs:
      build:
        name: Test
        runs-on: [self-hosted, windows-docker]
        steps:
          - uses: actions/checkout@v1
          - name: Install PSPolly
            run: Install-Module PSPolly -Force -Scope CurrentUser
            shell: pwsh
          - name: Install InvokeBuild
            run: Install-Module InvokeBuild -Force -Scope CurrentUser
            shell: pwsh
          - name: Install Pester
            run: Install-Module Pester -Force -Scope CurrentUser
            shell: pwsh
          - name: LiteDB - Windows
            run: Invoke-Build -File .\test\test.build.ps1 -Task TestLiteDb -Platform windows

We have our own agents running on local Intel NUC devices with Docker installed. One set is set up for Windows containers and one for Linux containers so that we can run both in parallel.

In our test scripts, we pull the latest images and then run against several sets of basic persistence configurations. These include LiteDB, SQLite and SQL. We also have an extensive set of configuration files that we load into each environment. Here’s an example of how we set up our LiteDB containers. While not the most elegant (we’re working on getting there!), it’s effective. We pull the proper image, set some environment variables and go from there.

    $Env:Configuration = "LiteDB"

    if ($Platform -eq 'linux') {
        $Logs = "$PSScriptRoot/Logs".Replace("C:\", "c:/").Replace("\", "/")
        $RepositoryPath = "/home/repository"
        $LogPath = "/home/logs/"
    }
    else {
        $Logs = "$PSScriptRoot\Logs"
        $RepositoryPath = $RepositoryDirectory
        $LogPath = $LogsDirectory
    }

    New-Item $Logs -ItemType Directory -Force | Out-Null

    $Image = "ironmansoftware/universal:$Version-preview-modules"
    if ($Local) {
        $Image = "universal"
    }
    elseif ($Platform -eq 'Windows') {
        $Image = "ironmansoftware/universal:$Version-preview-windowsservercore-ltsc2022"
    }

    docker create -p 5000:5000/tcp `
        --name PSULiteDb `
        -e "Data__RepositoryPath=$RepositoryPath" `
        -e "Plugins__0=UniversalAutomation.LiteDBv5" `
        -e "Plugins__1=PowerShellUniversal.Language.CSharp" `
        -e "NodeName=PSU1" `
        -e "SystemLogPath=$($LogPath)psu1_systemLog.txt" `
        --mount "type=bind,source=$($Logs),target=$LogPath" `
        $Image

    # Assuming Windows Host
    if ($Platform -eq 'Windows') {
        docker cp "$PSScriptRoot\assets" "PSULiteDb:C:\ProgramData\UniversalAutomation"
    }
    else {
        docker cp "$PSScriptRoot\assets\Repository" "PSULiteDb:$RepositoryPath"
    }

    docker start PSULiteDb
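
The long `docker create` call can also be assembled from data, which keeps the differences between persistence configurations in one hashtable. This is a minimal sketch that assumes only the options shown above; `Get-PsuContainerArgument` is a hypothetical helper and is not part of our test scripts. The double underscores in names such as `Data__RepositoryPath` follow the ASP.NET Core convention for mapping environment variables onto nested configuration keys.

```powershell
function Get-PsuContainerArgument {
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][string]$Image,
        [Parameter(Mandatory)][System.Collections.IDictionary]$Environment,
        [int]$Port = 5000
    )

    # Fixed options first, then one "-e KEY=VALUE" pair per setting, then the image.
    $Arguments = @('create', '-p', "${Port}:${Port}/tcp", '--name', $Name)
    foreach ($Key in $Environment.Keys) {
        $Arguments += '-e'
        $Arguments += "$Key=$($Environment[$Key])"
    }
    $Arguments += $Image
    $Arguments
}

# An ordered dictionary keeps the settings in the order they were written.
$Settings = [ordered]@{
    'Data__RepositoryPath' = '/home/repository'
    'Plugins__0'           = 'UniversalAutomation.LiteDBv5'
    'NodeName'             = 'PSU1'
}

$DockerArguments = Get-PsuContainerArgument -Name 'PSULiteDb' -Image 'universal' -Environment $Settings
Write-Host "docker $($DockerArguments -join ' ')"

# On a host with Docker installed, the array can be splatted to the CLI:
# docker @DockerArguments
```

Passing the arguments as an array avoids the backtick line continuations, which break silently if trailing whitespace is added after them.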

Once our container is up and running, we start running our tests.

With the container running, we wait for the PSU service to start up and then issue an App Token we can use throughout our tests. This function checks whether PSU is running, then logs in and issues a new token. We also use an internal module to issue a new license so that all features are enabled during testing.

    function Wait-Universal {
        $Retries = 0
        while ($true) {
            try {
                $State = Invoke-UniversalRestMethod -Path api/v1/alive -Anonymous
                Start-Sleep -Seconds 1
                if (-not $State.Loading) {
                    break
                }
            }
            catch {
                Start-Sleep -Seconds 1
                Write-Host "Waiting for Universal to start..."
                $Retries++
                if ($Retries -gt 30) {
                    throw $_
                }
            }
        }

        try {
            $ENV:TestAppToken = (Invoke-UniversalRestMethod -Path api/v1/apptoken/grant -UserName 'admin' -Password 'admin').Token
            Connect-PSUServer -AppToken $ENV:TestAppToken -ComputerName $Env:UniversalUrl

            Import-Module "$PSScriptRoot\..\tools\Licensing\Licensing.psd1"
            $License = Get-IMSLicense -Type PowerShellUniversal -Name Test
            Set-PSULicense -Key $License
        }
        catch {
            Write-Error $_
        }
    }
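
The polling half of `Wait-Universal` is a bounded retry loop. The sketch below isolates that pattern in a hypothetical `Wait-Condition` helper so it can be run without a PSU instance. Unlike the function above, it counts every attempt against the limit, including attempts where the check succeeds but reports that the service is not ready yet. That is a design choice for the sketch rather than a description of our test module.

```powershell
function Wait-Condition {
    param(
        [Parameter(Mandatory)][scriptblock]$Condition,
        [int]$MaxAttempts = 30,
        [int]$DelayMilliseconds = 1000
    )

    for ($Attempt = 1; $Attempt -le $MaxAttempts; $Attempt++) {
        try {
            # A truthy result means the service is ready; report how many attempts it took.
            if (& $Condition) { return $Attempt }
        }
        catch {
            # A failed check (for example, connection refused) is treated as "not ready yet".
            Write-Verbose "Attempt $Attempt failed: $_"
        }
        Start-Sleep -Milliseconds $DelayMilliseconds
    }

    throw "Condition was not met after $MaxAttempts attempts."
}

# Simulated service that fails twice before it becomes available.
$script:Calls = 0
$Attempts = Wait-Condition -DelayMilliseconds 10 -Condition {
    $script:Calls++
    if ($script:Calls -lt 3) { throw 'not ready' }
    $true
}
Write-Host "Ready after $Attempts attempts"
```

In a real test run the condition would wrap the call to the `api/v1/alive` endpoint and return whether loading has finished.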

The Invoke-UniversalRestMethod function just ensures that we are using the same URL throughout the tests. We are still updating tests to ensure this is consistent.

All our tests are written in Pester. We expose environment variables and have a test module used to invoke actions against our PSU service.

    Describe "Computer" {
        Context "Maintenance Mode" {
            It "should return correct status when maintenance mode enabled (GET)" {
                Get-PSUComputer | Set-PSUComputer -Maintenance $true
                try {
                    Invoke-WebRequest http://localhost:5000/api/v1/status
                }
                catch {
                    $_.Exception.Response.StatusCode | Should -be 503
                }
            }

Some of our recent tests require that we run them within the target PSU service due to platform differences. We’ve introduced an Invoke-TestCommand cmdlet to call an endpoint in PSU. As you can see below, we have a script block we can invoke.

    Describe "Git" {
        Context "Basic" {
            BeforeAll {
                $TempPath = Invoke-TestCommand -ScriptBlock {
                    $TempPath = [IO.Path]::GetTempPath()
                    $TempPath = (Join-Path $TempPath "PSUGitTest").Replace("\", "/")
                    New-Item $TempPath -ItemType Directory -Force -ErrorAction SilentlyContinue
                }

The implementation just turns the script block into a string and invokes a PSU endpoint.

    function Invoke-TestCommand {
        param(
            [Parameter(Mandatory = $true, Position = 0)]
            [ScriptBlock]$ScriptBlock
        )

        Invoke-RestMethod "$Env:UniversalUrl/invokeexpression" -Body ($ScriptBlock.ToString()) -Method POST
    }

The endpoint definition looks like this.

    New-PSUEndpoint -Url /invokeexpression -Method POST -Endpoint {
        try {
            Invoke-Expression $Body
        }
        catch {
            Write-PSULog $_.Exception.StackTrace
            throw $_
        }
    }

While this works, the errors aren’t great so we’re working on improving them. It typically just returns a 400 error in Pester without too much detail unless you look at the logs.
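
One way to get more detail back, sketched here under the assumption that the endpoint could return an object instead of throwing, is to capture the error message alongside the output. `Invoke-SerializedScript` is a hypothetical local stand-in for the endpoint, not the improvement we are building. It rebuilds a script block from its string form, much as the string would arrive in `$Body`.

```powershell
function Invoke-SerializedScript {
    param([Parameter(Mandatory)][string]$Script)

    try {
        # Recreate the script block from text and run it.
        $Output = & ([scriptblock]::Create($Script))
        [PSCustomObject]@{ Success = $true; Output = $Output; Error = $null }
    }
    catch {
        # Return the message instead of rethrowing so the caller can show it in a test failure.
        [PSCustomObject]@{ Success = $false; Output = $null; Error = $_.Exception.Message }
    }
}

$Good = { 1 + 1 }
$Bad = { throw 'boom' }

$Ok = Invoke-SerializedScript -Script ($Good.ToString())
$Failed = Invoke-SerializedScript -Script ($Bad.ToString())

Write-Host "Succeeded: $($Ok.Success), output: $($Ok.Output)"
Write-Host "Succeeded: $($Failed.Success), error: $($Failed.Error)"
```

Because only the text of the script block is sent, variables defined in the calling test are not available on the other side. Anything the remote code needs has to be written into the script block itself.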

We have over 1000 tests that we run on a nightly basis. With each feature added and bug fixed, we add new tests.

Many of our tests are run against multiple environments in the same PSU instance. We leverage Pester’s -ForEach functionality to ensure we have the same behavior in many different environments.

    if ($Env:Platform -eq 'windows') {
        $Environments = @("integrated", "pwsh", "powershell");
    }
    else {
        $Environments = @("pwsh", "integrated");
    }

    Describe "API.<_>" -ForEach $Environments {
        BeforeAll {

Once all tests have finished we report results.

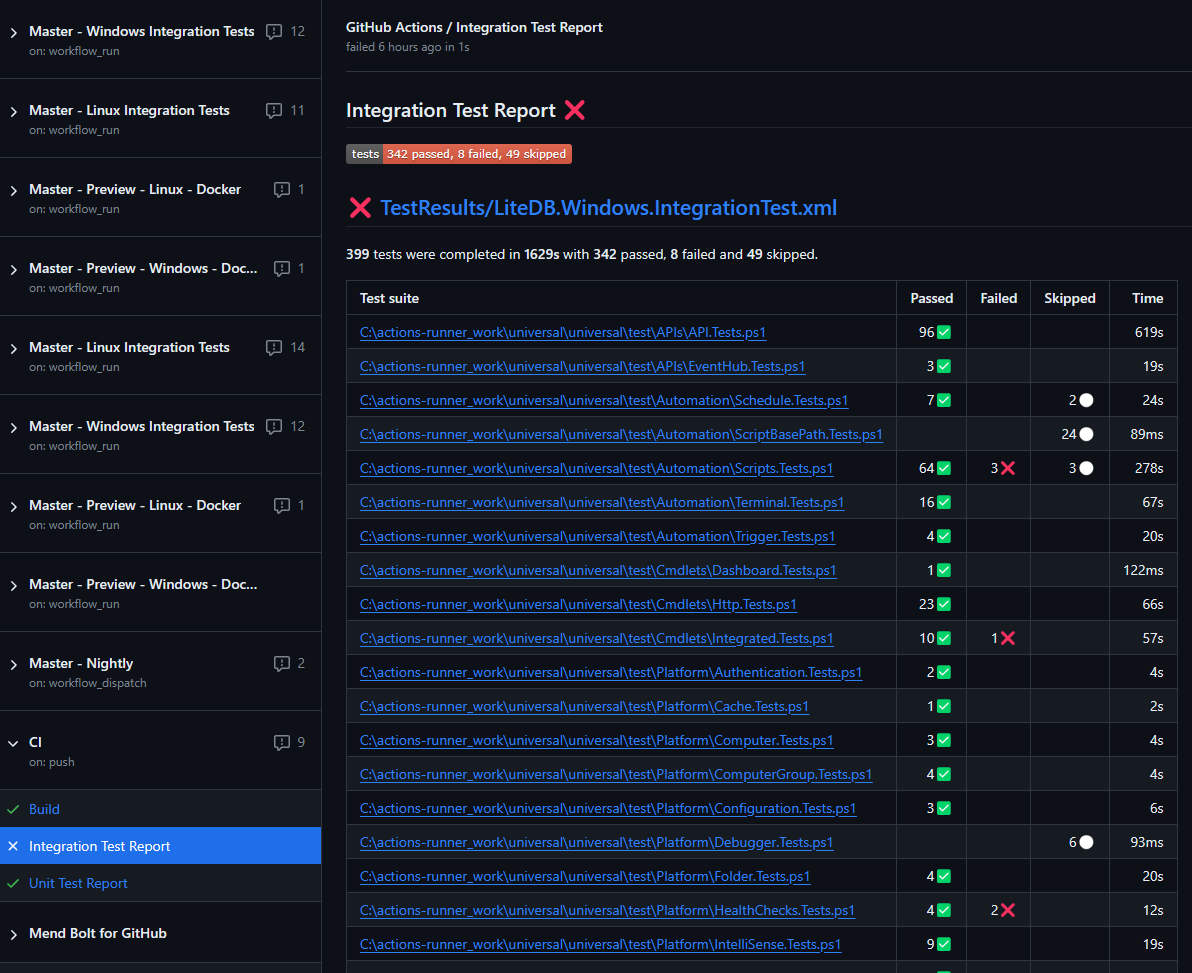

Once tests are complete, we use the dorny/test-reporter@v1 action to report the results back to GitHub. This is a pretty simple action to use. We just point it to the XML file that Pester produces.

    - name: Report
      uses: dorny/test-reporter@v1
      if: success() || failure()
      with:
        name: Integration Test Report
        path: 'TestResults/LiteDB.Windows.IntegrationTest.xml'
        reporter: java-junit

The result is a nice test result dashboard in GitHub.

We’ve found running our tests against Docker images to be much more reliable than running them on pre-built GitHub agents. We can also run them in parallel and scale out further to reduce our run times. We hope this gives you some ideas on how to test your own PowerShell Universal environments.
